Monday, August 7, 2017

2017-08-03: Ai Intern Derek Goddeau Brings Robot Arm To Life

It's one of the few robots in the Autonomy Incubator that doesn't roll or fly, but it's just as fun to watch: it's the robotic arm we received from DARPA (Defense Advanced Research Projects Agency), and Derek Goddeau is in charge of making it move.

"Right now, it does all the path planning on its own, so I can just tell it 'move to xyz' and it does it without me giving it instructions step by step," he explained.

In the video, Derek has the arm generate random positions and move between them gracefully, without any part of the arm colliding with another part. Think of it like a very slow game of Snake. Once he and the arm work out how to do that reliably, it can start moving a little faster. Then, it can try performing tasks in real time.

"Eventually, it will be able to identify objects on its own and put them where they need to be," he said.

Derek comes to the Ai from the Computer Science department of Old Dominion University in Norfolk, where he's currently a senior. His interest in robots springs out of the time he spent serving in the Navy, where he worked as an avionics technician.

"I worked communication, navigation, and electronic warfare systems," he said. "I was in the Navy for six years, and then I left because I wanted to go to school."

Because of his career in the defense side of computer science, Derek enjoys concentrating on the security of network-connected devices that aren't computers in the traditional sense— a category that includes robots, like the ones in the Ai.

"I really like the security of weird things, like cell phones, and robots are one of those weird things. There aren't a lot of people doing that," he said.

In fact, Derek was part of a project at ODU last semester that allows people to detect when someone in the area is using a "Stingray", a device that mimics a cell phone tower and collects identifying information from all of the phones in its radius. If you're into cybersecurity, I'd highly recommend checking out the project's website here.

In the meantime, Derek's internship in the Ai has been a success, both for him and for the lab.

"I learned a whole lot about robotics, and Gazebo and ROS, definitely," he said.

Friday, July 21, 2017

2017-07-21: Autonomy Incubator High School Volunteers Compete in Rover Challenge

This summer brought a flood of high school volunteers to the Autonomy Incubator; between the contingent of students from the Governor's School and the kids volunteering through NASA connections, we hosted ten students from the Hampton Roads area. Originally, Ai head Danette Allen set them all to work on the same task: build a rover to carry a 25-pound robotic arm.

However, division soon occurred within the group. One contingent wanted to use a commercial RC car base for maximum speed, while the other argued for building a tank-like base with six wheels for agility. Rather than making all of them agree on a design, Danette had a better idea.

"Do both," she said. "Split into teams, and at the end, we'll have a competition."

So, on the final day of the Governor's School students' stay at the Ai, volunteers Xuan Nguyen and Payton Heyman built an obstacle course and we had a robot rumble.

|

| Xuan and Payton crafted the track from elements around Building 1222 |

Thursday, July 20, 2017

2017-07-19: Autonomy Incubator Intern Abigail Hartley Opens Up a New Chapter

by Payton Heyman, a social media specialist-in-training

Abbey Hartley, an adored member of the Autonomy Incubator family, joined the team back in 2015 as a social media intern, but will soon be leaving to further her career after getting a new job with CBS.

Abbey grew up in Lake Wylie, South Carolina, and graduated just last year from Dartmouth with a degree in English.

“I sort of stumbled into the job,” she explained, talking about how she first started at the Ai. “My mother is good friends with Dr. Danette Allen, and she had told me to email her at the end of my junior year.”

She ended up becoming the first person to ever formally work solely in the social media field here, and thanks to her, it has come a long way. When she first started working in the summer of 2015, she only made daily Twitter updates and blog posts with the occasional short video made with iMovie, as the position was very new.

When intern Kastan Day joined her in the summer of 2016, they made a great team and elevated the social media presence even more. Better software became available, and within the next year the Autonomy Incubator Instagram, YouTube channel, and Facebook came to life, opening up a realm of creative ideas.

“[The job] was great, I loved it! I was the only person I knew interning at NASA,” Abbey said of coming back for a second summer.

“I’m always learning here and there are rarely opportunities like this for people like me,” she added.

After returning as a NASA intern four separate times, she decided to begin applying for jobs outside of the Langley Research Center, and has just recently scored a position as a multimedia journalist for CBS in Washington, D.C. There, she will be a reporter for connectingvets.com, and she is beyond thrilled for the opportunity.

Her grandpa was a nuclear physicist during the Cold War and her brother anticipates joining the Air Force as a flight surgeon after he completes medical school, so she has a lot of connections with the subject matter and cares deeply about it.

The company mainly hires veterans and people who have experience working for the federal government. Since Abbey has three years under her belt and a family history of military service, she fit the job perfectly.

“There are a lot of things out there for veterans, but they don’t even know about it,” Abbey explained. “[The company] has really good things going for it.”

Her last day here at the Ai is expected to be the eleventh of August, but she continues to do great things in the office every single day.

“I’m really going to miss the people here. Everyone here is my friend, and it’s going to be really hard to leave what has become my family from the only job I’ve ever really had. Hopefully, I can come back and visit somehow,” she said.

Extraordinary things are approaching for Abigail Hartley, but her fun spirit, determination, and strong work ethic will be missed greatly. Her contributions here have been remarkable, and she has, without a doubt, left an immense footprint on the Ai. We all know she is destined for success and we are looking forward to seeing her start a new chapter in her life next month. Godspeed, Abbey!

|

| Abbey writing a post for the Autonomy Incubator Blog. |

|

| Abbey hand flying a drone for a research project back in February. |

2017-07-19: Autonomy Incubator Aids in Coordinated Ozone Measurement Effort

In a very cool side quest to the OWLETS mission, the Autonomy Incubator launched a Hive UAV to measure atmospheric concentrations of ozone while a balloon and a C-23 Sherpa aircraft from Wallops simultaneously collected similar measurements.

The Sherpa took off from NASA Wallops Flight Facility on the Eastern Shore, then flew south across the Chesapeake Bay and performed a spiral above the CBBT island where OWLETS takes measurements. Then, it flew over to NASA Langley and performed a spiral while researchers on the ground launched a weather balloon and flew a Hive loaded with sensors into the air to take samples.

By taking the same measurements in multiple ways, NASA can collect correlative data about the composition of our atmosphere. This is the first time the Autonomy Incubator has been part of such a massive coordinated flight campaign, and it was thrilling for all of us.

Stay tuned for more about OWLETS, including the science from OWLETS PI, Tim Berkoff.

Tuesday, July 18, 2017

2017-07-18: Volunteers Compete for the Best Rover

by Payton Heyman, Ai social media specialist-in-training

Whose rover will reign victorious here at the Autonomy Incubator? Seven high school students, some volunteers and some part of a residential governor's school program, are competing against each other in two opposing groups to answer that question.

The goal of the competition is to successfully build a mobile rover in order to mount a 25-pound robotic arm on the top. This arm will be capable of moving a 7-8 pound wooden truss; however, the rover must be stable and not tip when the arm extends.

The first step of the project was the design, in which they focused on the creation of multiple 3D digital mock-ups to help decide on a final model. Different components were then ordered to start their task. Most were commercial, off-the-shelf parts, but some were ordered from a robotics competition vendor. The majority of them came in a kit where all of the pieces, screws, and instructions are included, allowing them to easily assemble the rover with only some slight modifications needed for additional equipment.

Then, each team had to wire up all of the electronics on the rover. Luckily this was quite simple since the controller board that is being used has several components in one. For example, it connects the speed controllers to the motor, as described by Eric Smith, one of the governor's school students and member of Group One.

Once the rover can move smoothly, an arm will be attached to the top in order to extend and grab the trusses created by Xuan Nguyen, a volunteer and competitor in Group Two.

The quarter-scale trusses are fairly basic structures made of wooden sticks, plastic cardboard, and hot glue. Each is half a meter tall with a triangular base.

Group One has nicknamed their rover ChurroMobile with an arm by the name of Gal-GaBot. ChurroMobile was moving quite successfully by the third week and will have the arm attached very soon.

Featured below is Cameron Fazio, another governor's school student. In this video he is proving the strength and mobility of their rover by grabbing onto it and having it pull him in a rolling chair throughout the halls of the Autonomy Incubator.

Group Two is also making great progress in the competition. Their rover goes by the name of Ironbot with an arm named RobotDowneyJr.

According to Group Two member Billy Smith, the easiest part of the process for him thus far was "understanding the project itself and what to do, but ordering the parts and using older technology came as a slight difficulty, but we have managed to do just fine."

The end of the competition is just around the corner and the champion group will be announced soon after evaluation. Both groups have been working very hard in hopes of winning and eagerly await the upcoming Rover-Off. So, stay tuned!

|

| Cameron Fazio and Eric Smith prepare their rover, ChurroMobile, for a test drive. |

|

| The interior of ChurroMobile prior to adding hinges and a flat base to prop the arm on. |

|

| Eric Smith started working with robotics in seventh grade and has kept at it for nearly five years; he begins his senior year this fall. |

|

| "In our case, the electronic wiring is relatively simple," Eric stated after several years of experience in clubs at school and competing in robotics competitions. |

|

| Xuan built the trusses out of light material with assistance from one of the other volunteers, Ian Fenn. |

|

| Billy Smith, governor's school student, working on part of the base for Group Two's rover. |

|

| Ian Fenn attaching the wheels to the flat base of the rover. |

Monday, July 17, 2017

2017-07-17: Autonomy Incubator Makes Historic OWLETS Flight Over Chesapeake Bay

|

| The Ai's Jim Nielan and Danette Allen were present for CBBT ops along with Ryan Hammitt, Eddie Adcock, Mark Motter, and Zak Johns. |

The OWLETS (Ozone Water-Land Environmental Transition Study) mission officially began with its maiden voyage over the Chesapeake Bay this morning, making the Autonomy Incubator and NASA Langley's earth scientists the first team to ever measure ozone levels directly on the land-water transition. Hive Three, one of our four Hive vehicles, carried an ozone monitor and four CICADA gliders from the Naval Research Laboratory to take meteorological data.

Today's event was only the first of many OWLETS flights, all of which will take off from the third island of the Chesapeake Bay Bridge-Tunnel (CBBT).

In addition to Hive-3 and its suite of sensors, NASA Langley also deployed a weather balloon and a lidar trailer to study atmospheric composition near the land-water transition.

|

| The lidar trailer uses two high-powered lasers to determine atmospheric composition. |

|

| The weather balloon also takes ozone and meteorological data, but follows wind patterns instead of flying a set route. |

Stay tuned for more in-depth coverage as this historic mission continues! Who knows, maybe our social media intern will be out there reporting from the field sometime soon. Until then, check out this video of one of the final practice missions, which we flew right here at NASA Langley:

Friday, July 14, 2017

2017-07-14: Autonomy Incubator Highlighted in Acting Administrator Robert Lightfoot's Centennial Address

Skip to 3:18:27 of the NASA Langley Centennial Symposium to hear NASA Acting Administrator Robert Lightfoot give the Autonomy Incubator a shout-out during his speech!

"And speaking of autonomy," he said, "the researchers here at [NASA] Langley's Autonomy Incubator are developing and testing algorithms and robotic systems that represent a step toward the safe operation of autonomous drones in aerospace." It's always nice to hear we're doing something that can be a source of pride for the American public, especially from the NASA Administrator.

Thursday, July 13, 2017

2017-07-13: Autonomy Incubator Welcomes Social Media Volunteer Payton Heyman

|

| Payton edits pictures for a blog post. |

In addition to the passel of high school students volunteering on the research side of the Autonomy Incubator this summer, the Ai has also enjoyed the hard work of a social media volunteer. Payton Heyman, a rising senior at Hampton Roads Academy, has joined us for two weeks of her summer to help run the Ai social media empire.

Although Payton will be the first to tell you she's loved computers for many years– "I used to make PowerPoint presentations for fun when I was little," she said– her time in the Ai has provided a chance to explore a new realm of ideas.

"I didn't know what to expect," she said. "It's cool learning about actual robots and NASA stuff."

"Everyone here has been super welcoming," she added.

|

| Payton took her first trip to the Back 40 today to document some outdoor test flights. |

An avid filmmaker who intends to go to college next year to pursue a film degree, Payton has found a place to learn new skills and even draw creative inspiration in her work for the Ai.

"I love editing videos; I think it's so much fun," she said. "[The work I'm doing here] is giving me inspiration for the stuff I wanna do in my own short films."

In fact, we'll continue to see Payton as a contributor to Ai social media in the future: she likes it here so much that she intends to keep volunteering during the school year as her busy senior-year schedule allows.

"I want to come back!" she said.

Tuesday, July 11, 2017

2017-07-10: Autonomy Incubator Welcomes Crime Author Patricia Cornwell

The Autonomy Incubator is a popular stop for visitors to NASA Langley. Over the years, figures such as the mayor of Newport News, the Flight Commander of the First Fighter Wing from Joint Base Langley-Eustis, and even former NASA Administrator Charlie Bolden have come by Building 1222 to see our UAVs dodge trees and deliver 3D-printed bananas. Today, we flew and Danced-With-Drones just like any other tour day in the Ai, but this time, the audience was internationally best-selling author (and avid helicopter pilot) Patricia Cornwell.

Patricia Cornwell arrived punctually at 9:30, still clad in her flight suit from landing her Bell 407 JetRanger on-Center just minutes before.

|

| Danette Allen and Patricia discuss some of the challenges facing autonomous flight. Power lines and moving ground vehicles, for example, are difficult obstacles to detect and avoid, even for humans. |

"I don't know if you remember, but we've met," Ai lab head Danette Allen said. Patricia toured Danette's previous lab, a virtual reality rapid-prototyping initiative, before Danette started the Autonomy Incubator.

"I do! It was October twenty-second, 2007," Patricia responded. "You know how I know? I found it in my journal."

|

| Danette Allen and Kerry Gough walk Patricia Cornwell through a VR crime scene in the Mission Simulation Lab (MiSL) at LaRC in 2007 |

|

| "I have carbon fiber envy," Patricia said during her tour of the Hive vehicle. |

|

| Danette explains how CICADA gliders navigate autonomously. |

Patricia asked a slew of questions about everything from obstacle avoidance to the nature of autonomy, relating some of her own experiences as a helicopter pilot and the things she'd seen on tours of other NASA centers. In addition to recording the entire visit for her own use, she took copious notes in a small orange notebook, jotting down everything that caught her interest– including the name of the Ai's management support assistant, Carol Castle. Perhaps we should keep an eye out for Carol's name in any future Kay Scarpetta books?

After a thorough introduction to the Ai and the work we do, Danette brought Patricia into the flight area to meet the team and see our research in action. First up was Ben Kelley, presenting our classic Dances with Drones object-avoidance demo.

|

| Patricia was especially interested in how we track Ben's location through fiducials on his hat. |

Next, Ben and Loc Tran demonstrated the 3DEEGAN object detection and classification system.

"I hope it sees me as a person and not an alien," Patricia joked. Don't worry, it did– although we'd like to think that if a friendly extraterrestrial landed at NASA Langley and wanted a tour of the Ai, we would happily oblige. We are a space agency, after all.

|

| Patricia compared browsing our wide selection of custom UAVs to being in a Ferrari dealership. We think they're pretty cool too. |

Finally, we closed the day by flying a miniaturized version of our package retrieval mission– always a crowd-pleaser.

|

| Ben explains the visual odometry running onboard the vehicle to detect the package. |

Today's visit was so successful that we might expect a return visit from Patricia Cornwell in the future. With her infectious enthusiasm for all things aviation, she's welcome at the Ai anytime.

Wednesday, June 28, 2017

2017-06-28: Autonomy Incubator Successfully Tests New Multi-Vehicle Interface

Months of work paid off this week when the Autonomy Incubator's Kyle McQuarry took off and landed two UAVs at once with just a push of a button. Known as MUCS, for Multi UAV Control Station, Kyle's interface promises to simplify the Ai's multi-vehicle flight missions by consolidating the controls for all the vehicles onto one screen.

"Basically, I can select one or more UAVs and send the same command to them at the same time," Kyle said of his control station. "When you're trying to control multiple UAVs, it's easier this way– it's more time critical than anything." Of course, you can still control one at a time if you want to.

Think about it: flying multiple vehicles with individual controls would be a logistical nightmare. There would be no way to ensure all the vehicles got the exact same command at the same time, and managing all the controllers at once would take multiple people. With the MUCS at our disposal, now the Ai can expand its research missions to include as many vehicles as anyone could possibly want.
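To make the "one command, many vehicles" idea concrete, here is a minimal Python sketch. It is not Kyle's code and says nothing about how MUCS is actually built: the `Vehicle` class, the `broadcast` function, and the command strings are hypothetical stand-ins, and a real control station would send commands over a network link instead of appending to a list.

```python
from dataclasses import dataclass, field


@dataclass
class Vehicle:
    """Hypothetical stand-in for a UAV; it just records the commands it receives."""
    name: str
    log: list = field(default_factory=list)

    def handle(self, command):
        self.log.append(command)


def broadcast(vehicles, selected_names, command):
    """Send the same command to every selected vehicle and return who received it."""
    selected = [v for v in vehicles if v.name in selected_names]
    for vehicle in selected:
        vehicle.handle(command)
    return [v.name for v in selected]


# Illustrative fleet; the names are made up for this example.
fleet = [Vehicle("uav-1"), Vehicle("uav-2"), Vehicle("uav-3")]
received = broadcast(fleet, {"uav-1", "uav-3"}, "takeoff")
print(received)
```

Selecting a single name sends the command to just one vehicle, and the same loop works whether the selection holds two vehicles or one hundred.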

"[MUCS] allows you to scale up to n-number of vehicles because realistically, if you're flying one hundred vehicles, you won't have one hundred controllers," Kyle said.

|

| The user interface for MUCS. Note the Ai logo tastefully watermarked into the background. |

"In general, we're still talking about what features we're going to add," he explained. "Right now, we're thinking about a map view, where you'll see the vehicles and maybe their trajectories." Other proposed features include using the tablet to set waypoints and draw no-fly zones right onto the map.

Because of its ease of use, MUCS has the potential to make multi-UAV missions accessible to people who might not have computer science or aviation experience. While Kyle designed the control station for Ai use, he adds that his creation's broader implications are "a nice side effect."

Thursday, June 22, 2017

2017-06-22: Autonomy Incubator Intern Kastan Day Takes Up 3DEEGAN Mantle

|

"Yeah, this is pretty different," he said, pecking at the command line on his computer. With only a year of college under his belt, he's hitting the ground running as an intern. "Progress in the real world is way different than progress at school," he added.

Kastan's work this summer follows the work that Ai member Loc Tran and intern Deegan Atha did last summer, a computer vision and deep learning effort playfully named 3DEEGAN. Here's a helpful video Kastan made on the subject last summer:

While the Ai's computer vision work so far has focused on letting UAVs identify objects in real time— recognizing a tree in the field of view and changing course to avoid it, for example— Kastan is taking a more targeted approach. He's developing a system of unique markers that the computer vision algorithm can recognize instantly, sort of like a barcode at the grocery store. The cash register doesn't have to visually recognize your Twix bar based on its size, shape, and features; it just scans the barcode and matches the pattern up with the one that represents "Twix bar" in its library. By applying unique markers of a known size to objects in the UAV's field of vision, things like identification, calculating distance, and determining pose become much, much easier.
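To see why a marker of known size makes distance so much easier, here is a minimal Python sketch of the pinhole-camera relationship. It is an illustration only, not Kastan's code: the function name, the 10 cm marker, and the 800-pixel focal length are assumptions chosen for the example.

```python
def distance_to_marker(marker_side_m, side_in_pixels, focal_length_px):
    """Estimate the distance to a square marker with the pinhole-camera model.

    distance = focal_length * real_size / apparent_size
    where the focal length and the apparent size are both measured in pixels.
    """
    if side_in_pixels <= 0:
        raise ValueError("the marker must be visible (positive pixel size)")
    return focal_length_px * marker_side_m / side_in_pixels


# Assumed values: a 0.10 m marker that appears 50 pixels wide
# through a camera whose focal length is 800 pixels.
print(distance_to_marker(0.10, 50.0, 800.0))  # about 1.6 meters
```

If the same marker shrinks to half as many pixels, the estimate doubles, which is exactly the shortcut an unlabeled tree can't give you.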

|

| Kastan holds the webcam up to a screen full of markers to test if the computer vision algorithm recognizes them. |

|

| What the machine sees is in the window on the left. See the green outlines around all the markers? The algorithm works! |

Now, to make sure his system is as efficient and as accurate as possible, he has to determine what kind of grid to use when he generates the markers.

"The three-by-threes give a lot of false positives, but the eight-by-eights are hard to identify quickly," he explained. "We're looking for a solution in between."

To do the time-consuming work of printing out and testing each kind of grid, from three-by-three to eight-by-eight, Kastan has enlisted the help of the Ai's three high school volunteers. This summer's class of volunteers includes Ian Fenn from York High, Dylan Miller from Smithfield High, and Xuan Nguyen from Kecoughtan High. Their job entails printing out a test sheet of markers and then moving it around in front of the camera to see how it behaves.

"We're seeing how many times they're identified and how many times we get false things," Ian said. "We're also seeing if the larger patterns are more easily identifiable than the small ones."

|

| Ian, left, and Dylan, right, move a sheet of five-by-five grid markers farther away from the camera rig to see at what distance the algorithm stops identifying them. |

"I'm more into engineering, but I also like computer science," Xuan said. "My uncle Loc said every engineer needs to learn how to code."

|

| Xuan takes notes about each grid's performance at different distances. |

Thursday, June 15, 2017

2017-06-15: Autonomy Incubator Intern Javier Puig-Navarro Expands Collision Avoidance Algorithm

|

| Javier explains non-Euclidean geometry to me, someone who had to take Appreciation of Math in college because I didn't make the cut for calculus. |

UIUC PhD candidate Javier Puig-Navarro is the professor of the Autonomy Incubator's summer intern squad. Over the four years he's returned to the Ai, he's become the go-to person for everything from math to physics to MATLAB questions because he's such a clear, patient teacher. Today, when Javier called me over on my way to the coffee pot, I knew I was about to do some serious learning.

"So, you remember my GJK algorithm that I started working on last summer, right?" he said.

"Yeah, Minkowski Additions and stuff," I replied, eloquently.

Javier's work at the Ai concentrates on collision detection and avoidance, using polytopes and a tricky bit of math called the Minkowski Addition to determine if two UAVs will collide. If the polytopes representing the two vehicles intersect, then that means they're on a collision course. It's completely explained in the blog post from last summer, which I'll link again here.
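For the curious, the overlap test can be sketched in a few lines of Python. This is a brute-force 2D illustration under stated assumptions, not Javier's algorithm: it builds the whole Minkowski difference (the Minkowski sum of one shape with the mirror image of the other) and checks whether it contains the origin, whereas GJK reaches the same answer without constructing the full shape. All function names here are made up for the example.

```python
from itertools import product


def cross(o, a, b):
    """Z-component of (a - o) x (b - o); positive means a left turn."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def convex_hull(points):
    """Andrew's monotone chain; returns hull vertices in counter-clockwise order."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts
    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]


def minkowski_difference(a, b):
    """Convex hull of every vertex of a minus every vertex of b."""
    return convex_hull([(ax - bx, ay - by) for (ax, ay), (bx, by) in product(a, b)])


def contains_origin(hull):
    """True if (0, 0) is inside or on the edge of a counter-clockwise convex polygon."""
    n = len(hull)
    if n < 3:
        return False  # degenerate shapes are ignored in this sketch
    return all(cross(hull[i], hull[(i + 1) % n], (0.0, 0.0)) >= 0 for i in range(n))


def polygons_collide(a, b):
    """Two convex polygons overlap exactly when their Minkowski difference holds the origin."""
    return contains_origin(minkowski_difference(a, b))


square = [(0, 0), (2, 0), (2, 2), (0, 2)]
overlapping = [(1, 1), (3, 1), (3, 3), (1, 3)]
far_away = [(5, 5), (6, 5), (6, 6), (5, 6)]
print(polygons_collide(square, overlapping))  # True
print(polygons_collide(square, far_away))     # False
```

The intuition: if some point of one shape minus some point of the other equals zero, the two shapes share that point.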

Javier's algorithm will also move one of the shapes so that they no longer overlap, thereby avoiding the collision. When it does this, he wants to make sure his algorithm chooses which direction to correct a collision in a truly random way— not favoring displacing the polytope upwards over displacing it sideways, for example. It's called removing algorithm bias, and Javier assures me it's very hot right now.
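One common way to pick a direction with no favorites is to draw each coordinate from a normal distribution and then scale the result to length one. The Python sketch below shows that trick; the source doesn't say how Javier's algorithm actually samples, so treat the function name and approach as assumptions.

```python
import math
import random


def random_unit_vector(dim=3, rng=random):
    """Draw a direction uniformly from the unit sphere (Euclidean length 1).

    Independent Gaussian coordinates have no preferred direction, so
    normalizing them gives every direction the same chance.
    """
    while True:
        v = [rng.gauss(0.0, 1.0) for _ in range(dim)]
        length = math.sqrt(sum(c * c for c in v))
        if length > 1e-12:  # retry in the vanishingly rare near-zero case
            return [c / length for c in v]


direction = random_unit_vector()
print(direction)
```

By contrast, picking each coordinate uniformly between -1 and 1 and then normalizing would favor the diagonals, because the corners of a box stick out farther than its faces.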

As he works on correcting his algorithm bias, Javier needs a way to visualize distance and direction to make sure his algorithm is choosing completely at random. To do that, he built a program that draws 2D and 3D balls of a given radius, which is what he called me over to take a look at. There's about to be a lot of math happening, but it's cool math! Ready?

This sphere and circle are generally what we think of when we hear the word "ball." They're defined by Euclidean distance— each of the points on the circumference is the same distance away from the center point if you use this formula:

Euclidean norm

This is the geometry you learned in ninth grade. However, it is by no means the only definition of a mathematical "ball." Look at this other visualization from Javier's algorithm.

This diamond thing is also a ball, according to math, because all of the points are equidistant from the center when you calculate them this way instead of the Euclidean way:

Sum norm

As you can see, this is called the sum norm, or norm 1. The Euclidean Norm, predictably, is called norm 2. So, norm 1 is a diamond and norm 2 is a sphere. There is also an infinity norm, and it looks like this:

A cube! That's also a ball in this case, if you calculate the distance from the center like this:

Infinity norm

That's the three main norms: 1, 2, and infinity. However, there are infinite norms between those norms. Like, what would norm 3 look like?

A cube with rounded edges, because it's on the spectrum between a sphere (2) and a cube (infinity). So really, distance can be visualized in infinite ways, on a sliding scale from diamond to sphere to cube. Isn't that the wildest?
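If you'd like to try the norms yourself, here is a short Python sketch using the standard textbook definitions. It is not Javier's visualization program; the function name and the sample point are made up for illustration.

```python
import math


def p_norm(vector, p):
    """Length of a vector under the p-norm.

    p = 1        : sum of absolute values          (the diamond)
    p = 2        : square root of sum of squares   (the sphere)
    p = math.inf : largest absolute coordinate     (the cube)
    """
    if p == math.inf:
        return max(abs(c) for c in vector)
    if p < 1:
        raise ValueError("p must be at least 1 to define a norm")
    return sum(abs(c) ** p for c in vector) ** (1.0 / p)


point = (3.0, 4.0)
for p in (1, 2, 3, math.inf):
    print(p, p_norm(point, p))
```

For the point (3, 4), the distance from the center shrinks as p grows: 7 under norm 1, 5 under norm 2, roughly 4.5 under norm 3, and 4 under the infinity norm. A "ball" is just every point whose distance comes out the same, which is why changing p changes the shape.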

"So, you need all of these to get rid of your algorithm bias?" I asked.

"No, I actually only need the 2 norm," Javier said. "I just thought this was a good conceptualization for you."

The Autonomy Incubator and Javier Puig-Navarro: bringing you a college-level math lesson to go with your regularly scheduled UAV content. Thanks, Javier!

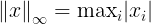

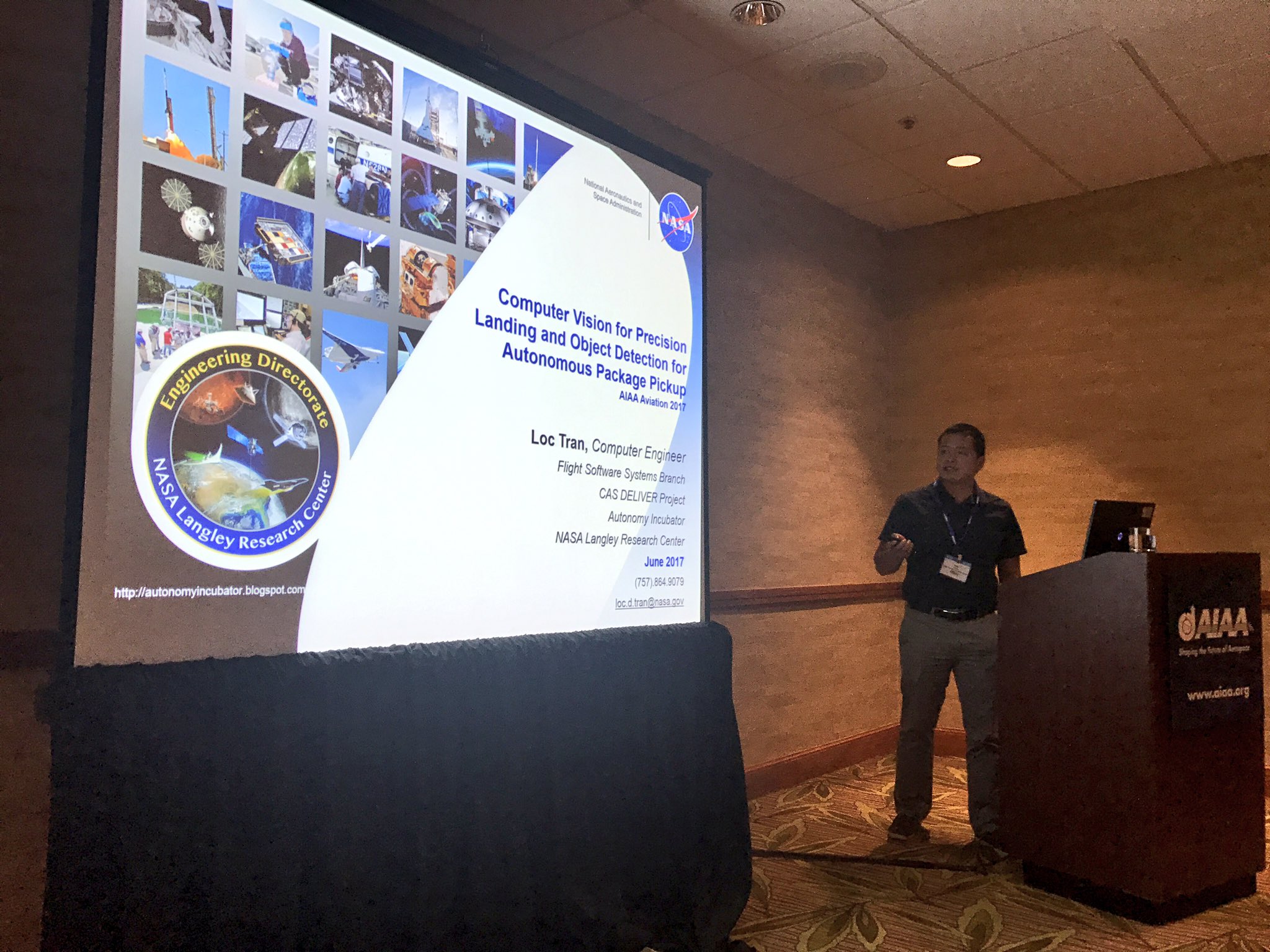

2017-06-07: Autonomy Incubator Wows Again At AIAA Aviation Special Session

|

| The squad celebrates another successful year at AIAA Aviation. |

If AIAA Aviation were the Super Bowl, the Autonomy Incubator would be polishing several rings right now. The success we had in our first special session at Aviation 2015 led to us receiving even more exposure for our smash hit presentations at Aviation 2016, and at Aviation 2017 last week, the hype continued to build-- even if our papers weren't named after Star Trek references this time. Our team presented on the full breadth of the Ai's research, from human-machine interaction to time-coordinated flight, and dominated Wednesday's special session on autonomy.

Dr. Danette Allen, Ai head and NASA Senior Technologist for Intelligent Flight Systems, gave the Ai's first presentation of the week on Wednesday morning during the Forum 360 panel on Human-Machine Interaction (HMI). She presented on ATTRACTOR, a new start that leverages HOLII GRAILLE (the Ai's comprehensive mission pipeline for autonomous multi-vehicle missions) and seeks to build a basis for certification of autonomous systems with trust and trustworthiness via explainable AI (XAI) and natural human-machine interaction. Given the proposed emphasis on HMI and "trusted" autonomy, we are excited to begin working on ATTRACTOR (Autonomy Teaming & TRAjectories for Complex Trusted Operational Reliability) in October 2017.

|

| Danette Allen at Aviation 2017 |

|

| Jim Nielan |

|

| Loc Tran |

|

| Javier Puig-Navarro |

|

| Meghan Chandarana |

|

| Erica Meszaros |

|

| Natalia Alexandrov |
